Should we just be building more datasets?

There are datasets we can build to train AI systems to make wiser decisions and be easier to oversee.

It has been a good five years for the Bitter Lesson. General methods leveraging computation have largely swept away the domain-specific hacks and tricks that defined many AI subfields for decades. Do any good counterarguments or caveats still stand? I’ll call my favorite “The Engineer’s Consolation”:

For most tasks, there exists some combination of general methods and domain-specific hacks that outperforms general methods alone—at least for a while

Michael Nielsen offers a version of this argument, noting that Deep Blue—which the original Bitter Lesson essay pointed to as an example of the power of general methods—actually incorporated extensive feature engineering. Deep Blue’s hybrid approach didn’t stand the test of time, but there was a period of time when integrating a large number of expert human heuristics with a powerful general system was the best strategy for achieving the highest chess performance.

This is cold comfort for researchers invested in specialized, domain-specific algorithms: ultimately, the general approach wins. You could also say it’s obvious in an uninteresting way: we shouldn’t be surprised if there are usually tweaks around the edges that improve performance on a specific task while leaving the bulk of the heavy lifting to general methods. This is especially true if we consider fine-tuning or in-context learning on a nontrivial number of examples to count. It may feel strange to call fine-tuning on a domain-specific corpus a “domain-specific hack” — fine-tuning is, in some sense, an extremely general method — but the point is that there's usually a way to perform extra work to optimize a general method for a particular task or area.

Tasks Where Fine-Tuning and In-Context Learning Probably Help

What are some tasks where general methods are likely to struggle, but are also likely to benefit from fine-tuning or prompting with an appropriate task-specific corpus? Strong contenders include any task requiring a deep or expert-level underlying conceptual model of a specific domain, where the domain is complex relative to the amount of information about it in the training data, but bounded enough that a useful task-specific corpus for fine-tuning or prompting can actually be constructed. Examples include:

Writing reinforcement learning pipelines for training simulated quadrupeds to perform navigation tasks in simulated environments

Constructing statistical models to predict the impact of climate change on rainfall within specific ecosystems

Generating testable hypotheses that could resolve controversies about hippocampal function

Creating detailed logistical plans for game studios to produce games with specific parameters (feature set, team size, technology stack) within a given timeline

I’ve purposefully limited each of these skills to narrow domains. With adequate time and effort, it's plausible that LLM-savvy domain experts could curate a set of examples and field-specific information to substantially enhance the capabilities of a frontier model in these areas through targeted fine-tuning and prompting. While it might not be worth the effort to enhance performance in such narrow domains, and the models might not achieve human expert levels, it would be surprising if they couldn't outperform a naïve zero-shot prompt.

These examples are sufficiently constrained that assembling an effective task-specific dataset for fine-tuning or prompting seems feasible. However, let’s say that we’re interested in a more general set of abilities around having the level of judgment, discernment and foresight necessary to minimize the probability of catastrophic failures. Specifically, I’m thinking here about capabilities that we would want a system to be really good at if its primary job was to monitor and evaluate the output of powerful, potentially deceptive systems (although they are also capabilities that we might want a broader range of systems to have as well, if we could be confident that these systems were robustly aligned with human values). These would presumably include:

error detection: identifying false statements or outright errors

misleading argument detection: identifying arguments which mislead without including false statements, such as reporting facts that seem to support the conclusion and omitting facts that don’t

cumulative error recognition: the ability to recognize that an error has occurred even if the exact location of an incorrect claim cannot be pinpointed (for example, by identifying contradictions between the implications of earlier and later claims of a complex argument)

negative externality identification: ability to recognize side effects or important negative externalities of following a proposed plan or following proposed advice

other important capabilities: the preceding bullet points certainly aren’t intended to be comprehensive. It may be that most of the value of the ‘building datasets to train for wisdom-relevant skills’ approach comes from training for specific wisdom-relevant skills not described here.

To give this cluster of capabilities a label (and, if we’re being honest, to clarify the relevance of this piece to a particular essay contest which defines “wisdom” roughly as “thinking/planning which is good at avoiding large-scale errors”), let’s call these ‘wisdom-relevant skills’. Are these the kinds of capabilities that fine-tuning or in-context learning can help with? I suspect the answer is “yes, to some degree”, and there’s some research nodding in this direction that I’ll mention in a moment. But there’s a lot of low-hanging fruit here still to be picked.

Tasks Where It Would Be Good to Know How Much Fine-Tuning and In-Context Learning Actually Help

Consider our previous example of a model fine-tuned to generate testable hypotheses about hippocampal function. If we broadened the input to do the same for a range of other topics within other scientific fields, how good would the resulting system be at hypothesis generation in general? Similarly with respect to wisdom-relevant skills, we might ask: Are there latent skills of “identifying errors”, “detecting misleading arguments”, “cumulative error recognition”, etc. that can be reliably elicited by providing enough examples of doing these tasks well across several different domains? If so, how successfully can we elicit these skills and how well do they generalize? These questions seem unanswered and empirically answerable. A few project ideas:

Collating existing datasets useful for training & evaluating systems’ abilities to detect outright errors and misleading arguments, creating new such datasets, and conducting experiments to determine the degree to which fine-tuning on such datasets generalizes across domains. Scalable oversight strategies such as debate and critique require models to be really good at identifying problems with arguments, particularly in cases where errors might be subtle or misleading. As such, scalable oversight research, as well as as some research concerned with reliability more broadly, has already produced a number of datasets that could be useful for training & evaluating systems’ abilities to detect outright errors and misleading arguments. See for example Saunders et al. 2022, Lightman et al. 2023 (PRM800K), Kenton et al. (2024)’s modification of PrOntoQA, bug detection benchmarks such as PyTraceBugs and BugHunter, and hallucination benchmarks such as Autohall, HaluEval, Med-HALT, and HalOmi. This is great, though there’s definitely room for more dataset-building work here. For example, of the datasets mentioned, only PRM800K and Kenton et al.’s modification of PrOntoQA contain annotations of errors in claims that proceed from premises to conclusion—a key error type that we would like debate models to detect—and are limited to mathematics and logic respectively. One could imagine creating a large number of datasets illustrating successful error identification across a wide range of domains, using this to curate a well-designed fine-tuning corpus or multi-shot prompt, and testing whether this yields improvements in error detection ability that generalize to domains not represented in the prompt or fine-tuning corpus. Even just collating or surveying relevant datasets from the literature that could be used to train and evaluate models across many different error detection contexts seems like a worthwhile project. For example, it may turn out that a model is very good at identifying errors in formal domains like mathematics and logic, but that this skill does not generalize well to hard problems in less structured domains like philosophy or economics. If so, this would be really important to know before we put too much stock in models’ ability to self-critique on topics that are beyond our ability to evaluate well. Novel datasets in which subtle errors in step-by-step arguments have been annotated across many domains and difficulty levels could also enable researchers to more fully explore model strengths and limitations in this regard.

Additionally, while it makes sense that Lightman et al. 2023 and similar work focus on detecting the specific location of the initial error in the context of mathematical reasoning, there are domains where this might run into practical difficulties distinguishing between ambiguously stated but fundamentally sound claims vs. technically correct but misleading statements that set the stage for more serious errors. A language model’s ability to identify misleading (but not necessarily explicitly incorrect) statements in arguments could end up being an important skill to train and evaluate.

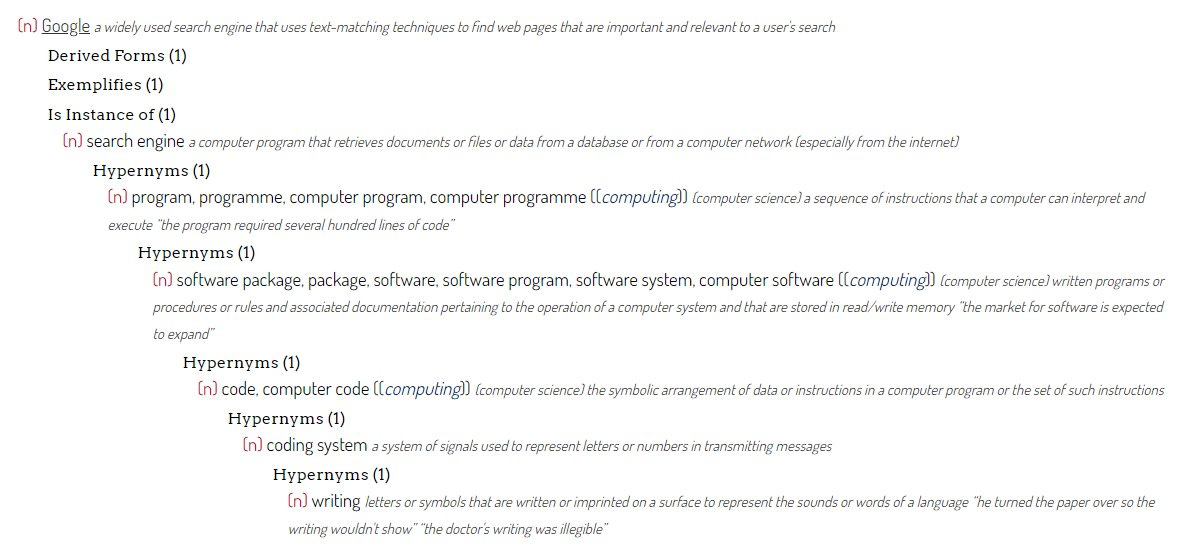

Building datasets for cumulative error recognition. One thing that can make evaluation difficult is that individual steps of an argument, plan, etc. can seem reasonable when divorced from the context of the entire chain of reasoning, but may nevertheless contain subtle, hard-to-detect errors. If enough of these slight errors add up, or if the impact of a single slight error accumulates as it is propagated forward, it can lead to incorrect or even absurd conclusions. Examples include the obfuscated arguments problem and my favorite WordNet hypernym chain.

Being able to recognize when subtle errors have accumulated has occurred seems important for avoiding errors of this kind. Once again, there is a lot of work to be done to acquire relevant training and evaluation data across a wide range of domains. Exactly what form this data should take is part of the research that needs to be done here, but I’m imagining something along the lines of:

Identify many cases of arguments/proofs/explanations where an error or misleading argument leads to an incorrect conclusion, and where the issue is subtle enough that leading LLMs don’t find the issue. These could be pulled from some of the datasets mentioned in the previous section, or from cases explicitly designed by humans to contain difficult-to-detect errors. Some training data could also be automatically generated from questions where we already know the correct answer: ask an LLM to introduce subtle errors into many known-to-be-correct arguments/proofs/explanations and to tweak them so that they seem to support incorrect conclusions; find the subset of these cases where the LLM is fooled by its own misleading arguments.

Having done this, try to figure out ways for each specific case that the LLM might be able to at least realize that there is an error, even if it’s unclear on where the error is (or explore prompts for getting the LLM to figure out such ways on its own). For example, after solving an equation for x, you can plug the value you came up with back into the original equation and see if the equality still holds. If it doesn’t, then you know you made a mistake, even though you don’t know where the mistake was.

Fine-tune on examples of successfully applying such methods to detect that an error has occurred when an error is present (and to fail to detect that an error has occurred, when no error is present) to see if you can elicit an improved capability for identifying when something has gone off the rails. Conduct experiments to see how well this generalizes across domains and difficulty levels.

Building datasets for negative externality identification. I’m not sure how best to go about this in the general case; I’d love for people to think more about this. A dataset of detailed business plans with steps containing hidden externalities (e.g., some step of the plan involves buying up X amount of some rare mineral, which sounds reasonable until you realize that this is close to the global supply, and would have very negative consequences for the availability and affordability of certain cancer treatments, etc) seems like a decent example of the sort of thing that might be valuable here.

Other important capabilities. The scope of wisdom-relevant skills extends beyond these examples, and uncovering other such capabilities could be plausibly be more valuable than addressing the specific ones discussed above. I'd be excited about research focused on understanding what these skills might look like and how to construct datasets to train and test them. Other possible directions worth exploring could include recognizing claims in an argument that, if incorrect, would propagate errors forward (as opposed to claims that would have little impact on the conclusion), recognition of fragile or implicit assumptions, or breaking "negative externality identification" into more tractable sub-areas such as "recognizing who might be affected by a decision beyond the obvious, direct stakeholders", "assessing the likelihood of secondary and tertiary effects", etc.

Should we do this?

Building out datasets and evaluations focused on wisdom-relevant skills could significantly contribute to our ability to make progress on scalable oversight and avoid large-scale errors more broadly. This work is relatively accessible, not requiring insider access to unpublished frontier models, specialized ML experience, or extensive resources. In principle, many individuals can contribute to creating datasets and evaluations of this kind.

Of course, these skills also represent capabilities that are concerning if misused by systems (or system operators) with goals contrary to our own. This is beyond the scope of this essay, but my current position is that (i) to the extent that models already possess these abilities latently, such that they can be elicited by methods as simple as fine-tuning and prompting, a significant portion of the associated risk is already present; and (ii) the contribution that harnessing these abilities would make to scalable oversight would likely be net-positive, as it would increase the probability that scalable oversight methods can help us navigate a critical risk period in which sufficiently specialized 'savant' models—trained to be superhuman at particular alignment-relevant tasks, without superhuman general intelligence—actually can "do our alignment homework".

I’m uncertain about this position, and can imagine circumstances where it could go wrong. For example, if a cumulative error recognition dataset were to be developed, and if it turned out that this dataset were to greatly improve an LLM’s general planning abilities when included as part of the training pipeline, then I might worry that this situation is no longer well-described as “eliciting a latent capability”, and rather could be adding a new capability in a way that could increase risk. However, to the extent that we can make the best use of the wisdom-relevant capabilities latent within existing models with low risk of introducing risky capabilities into future models, I’m fairly confident that we should do so.

Postscript: I’ve been dedicating some of my own time and research funding to building out some small but high-quality datasets which I hope will be useful for training & evaluating monitoring systems’ abilities to detect outright errors and misleading arguments, and for scalable oversight research more broadly. To be clear, this project is just one small example of the type of work described in this essay; it’s certainly not intended to comprehensively address the vision described. This project is described in more detail on the Github repo, where the datasets should appear within the next few months.

To be notified when these datasets become available, you can either subscribe to this Substack or enter your e-mail here to receive a one-time message when the datasets are released.

Claude Sonnet and GPT-4 assisted with word choice and helped revise for style and readability, suggested the example of regional climate prediction in the first set of bullet points, and helped brainstorm on ‘other important capabilities’.